In May 2016, a Tesla car in Florida crashed into a truck.

The truck was turning left across the highway. The Tesla was coming down the highway towards it.

The collision happened at highway speed.

The car drove under the truck’s trailer. The impact tore off the roof of the car.

The car kept moving after the impact, hit another object, and came to a halt off the highway.

The driver of the truck was not injured by the impact. The Tesla driver did not survive.

Investigators found that the Tesla driver had switched on the Autopilot feature.

The driver had relied on Autopilot to drive. When the truck turned across the car’s path, Autopilot failed to brake or change lanes in time.

Tesla has brought the idea of self-driving cars to a mass audience. While the technology is not perfect, it has become shockingly good.

Self-driving cars can now handle most of the driving and have shown the ability to avoid many mishaps that would have been hard for human drivers to avoid.

The magic behind this technology is called machine learning.

ALV — Autonomous Land Vehicle

In the 1980s, a team was tasked by the US government (DARPA, to be specific) with building a self-driving vehicle.

The team was one of the first to attempt such a feat.

They did not have much time to build this. A vehicle was quickly put together. It was called the ALV — Autonomous Land Vehicle.

They were attempting to drive on a dirt road.

Their idea was to use a camera to record video of the road. A computer would then classify each pixel of the image based on its colour.

Green meant grass. Blue meant sky. Brown meant road.

It sounds quite simple. And in retrospect, it is simple.

Using this, they would get a rough idea of what the road ahead looked like. Once they knew the road ahead, driving on it was easy.
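The colour-based approach can be sketched in a few lines of Python. This is a minimal illustration, not the ALV team’s code: the reference colours are made-up values, and `classify_pixel` and `road_centre` are hypothetical helper names. Each pixel gets the label of the nearest reference colour, and the average position of the "road" pixels in a row gives a rough idea of where to steer.

```python
# Hypothetical reference colours (R, G, B); the ALV team's real values are not known here.
REFERENCE_COLOURS = {
    "grass": (40, 160, 40),
    "sky": (80, 140, 230),
    "road": (140, 100, 60),
}


def classify_pixel(rgb):
    """Label a pixel with the reference colour it is closest to (squared RGB distance)."""
    return min(
        REFERENCE_COLOURS,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(rgb, REFERENCE_COLOURS[label])),
    )


def road_centre(row):
    """Average column index of 'road' pixels in one image row, or None if no road is seen."""
    road_columns = [i for i, pixel in enumerate(row) if classify_pixel(pixel) == "road"]
    if not road_columns:
        return None
    return sum(road_columns) / len(road_columns)


# One image row: grass, road, road, grass
row = [(40, 160, 40), (140, 100, 60), (135, 95, 55), (45, 150, 50)]
print(road_centre(row))  # 1.5: the road is centred between columns 1 and 2
```

The weakness of this approach is easy to reproduce: a blue sheet lying on the road, say `(0, 0, 255)`, is labelled `"sky"` because that is its nearest reference colour, so that part of the road simply disappears from the computer’s view.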

This system worked.

The vehicle was able to follow the dirt track fairly accurately, though only at about 3 km/h.

They tried to scale this up by increasing the speed and the variety of roads.

They hit a problem.

On an empty dirt road, the colour of each pixel can be relied upon. But in a more dynamic setting, the system would fail.

What if the road’s colour changed from brown to black? What if someone dropped a large blue-coloured bedsheet on the road?

The system would get confused.

They kept improving and adapting the code that decided what the camera was seeing.

ALVINN — Autonomous Land Vehicle in a Neural Network

The major breakthrough came when a group at Carnegie Mellon University tried to build an autonomous vehicle.

After trying several different approaches, they found a promising solution.

Instead of telling the computer what each colour meant, they decided to show it the right way to drive and let it work out the rest.

So, instead of telling it where the road was (e.g., brown pixels), a human would first drive along the road, and the system would learn to repeat the same.

The technology was called neural networks, a branch of machine learning.

So now, they did not have to hand-code rules for the machine. All they did was drive with the camera on. That data was then fed into the computer.

The computer would then try to replicate the same driving.
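The idea of learning from a human driver (often called behavioural cloning) can be sketched with a toy model. ALVINN itself used a small multi-layer neural network fed with low-resolution camera images; the sketch below deliberately simplifies that to a single linear layer trained by stochastic gradient descent. The five-pixel "images", the steering values and the function names are all hypothetical.

```python
import random


def train_steering_model(demos, epochs=2000, lr=0.05, seed=0):
    """Fit steering = w . x + b to (image, steering) pairs recorded while a human drove."""
    rng = random.Random(seed)
    weights = [0.0] * len(demos[0][0])
    bias = 0.0
    for _ in range(epochs):
        image, target = rng.choice(demos)  # one recorded moment of human driving
        error = predict((weights, bias), image) - target
        # Stochastic gradient step on the squared error 0.5 * error**2
        weights = [w - lr * error * x for w, x in zip(weights, image)]
        bias -= lr * error
    return weights, bias


def predict(model, image):
    """Steering output of the linear model for one image."""
    weights, bias = model
    return sum(w * x for w, x in zip(weights, image)) + bias


# Hypothetical demonstrations: 1 marks a road pixel; -1 = steer left, 0 = straight, 1 = steer right
demos = [
    ([1, 1, 0, 0, 0], -1.0),
    ([0, 1, 1, 1, 0], 0.0),
    ([0, 0, 0, 1, 1], 1.0),
]
model = train_steering_model(demos)
print(round(predict(model, [0, 0, 0, 1, 1]), 2))  # close to 1.0: steer right, as the human did
```

Nothing in the code says what a road looks like. The model only ever sees images paired with what the human did, and it adjusts its weights until its steering matches.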

This worked.

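In 1995, building on this work, CMU researchers Dean Pomerleau and Todd Jochem drove across the US, from Pittsburgh to San Diego, in an autonomously steered vehicle. The system handled about 98% of the steering on its own, while the humans controlled the accelerator and brakes.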

The system was able to accurately make sense of the roads and turn accordingly.

But this system had challenges.

The biggest challenge was that it had to be “trained”.

For it to know what to do and what not to do, it needed to be shown all kinds of different scenarios.

Over the years, researchers drove more and used that data to train the system. The system kept improving and got better at driving.

But it still couldn’t drive like a human.

To get better, it needed more data.

Tesla, Waymo, & Others

Over the years, compute power improved dramatically.

Computers could process a lot more data, faster, and more energy-efficiently.

But the lack of data remained.

From the late 2000s, different companies started collecting far more data for self-driving cars.

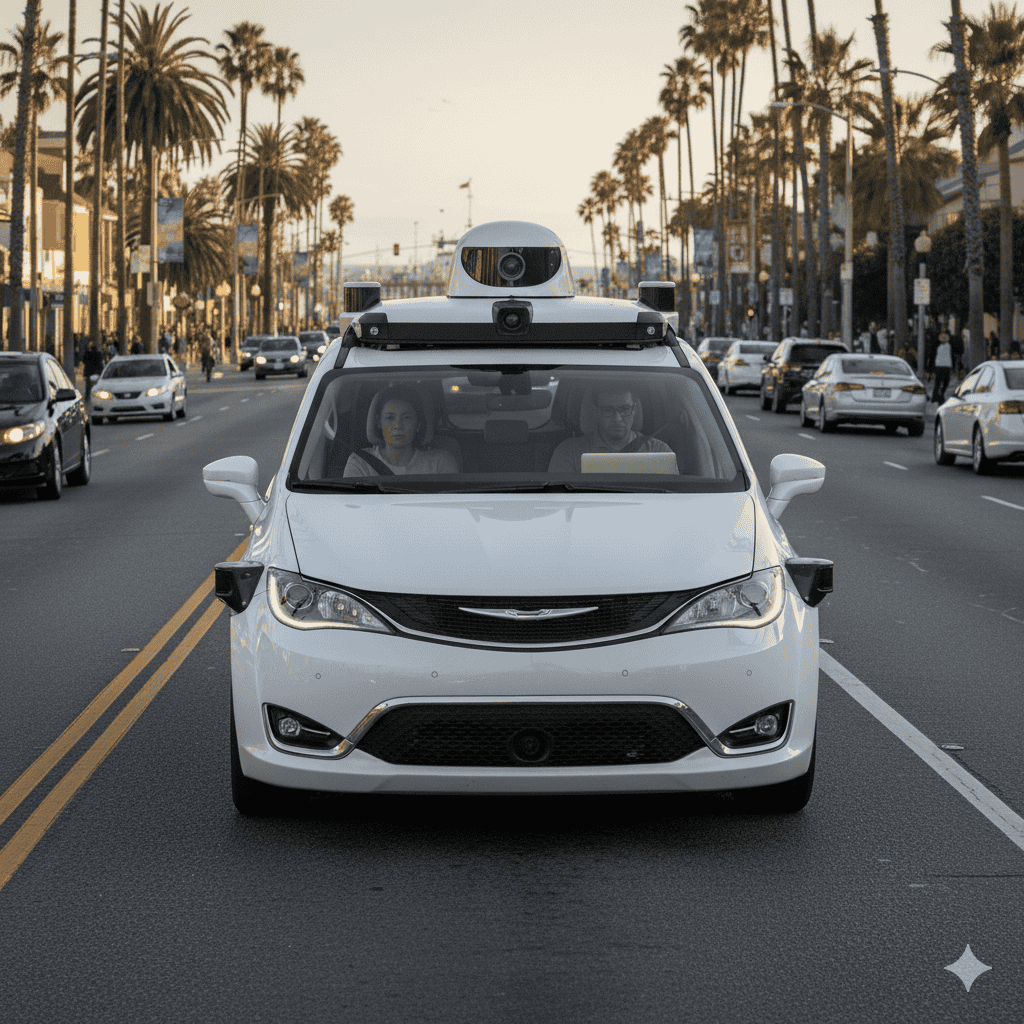

Google started a self-driving car project, which later became Waymo. The cars were owned by the company and driven across different cities in the US.

The programme ran for several years before it started showing results.

Waymo launched its first commercial robotaxi service, Waymo One, in 2018.

Its approach was to drive its cars across different cities, collecting data from onboard cameras, lidar and radar sensors, and 3D mapping systems.

They felt that the variety of data would help the system learn better.

Tesla also started collecting data, but in a different way.

Their approach was to rely mostly on video camera data to train their system. They equipped the cars they were selling to the public with cameras.

This data was then used to train their system.

From the mid-2010s, Tesla gradually rolled out self-driving capabilities under its Autopilot feature.

Various other companies, such as Cruise, Uber, and Baidu, also started collecting data for their self-driving car programmes.

The challenge?

Despite the millions of kilometres’ worth of data collected, these cars are not fully reliable.

They are still making errors.

Some argue in their favour that they make fewer mistakes than human drivers.

That may well be true.

But the mistakes they do make often seem incredibly easy to avoid.

So, the question is, why do they commit such mistakes?

Training data.

The real world contains an enormous number of unusual situations. No system is trained on all of them.

Variables like traffic, weather, light, shadows, temperature, wind, and oddly shaped vehicles can combine in ways the machine learning system has never seen.

The Tesla that crashed into the truck likely did so partly because that situation had not been incorporated in its training data, and partly because the driver failed to intervene in time.

When such a system encounters a situation it has not been trained on, it often fails to work well.
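One way to see this failure mode is a nearest-neighbour "driver" that copies the action from the most similar situation in its training data. This is a hypothetical toy, not how Tesla’s or any other company’s production system works: the three features, the training examples and the `max_distance` threshold are all made up. Without the distance check, the model would always return some answer, however unfamiliar the situation; the check is one simple way to hand control back to a human instead.

```python
import math

# Hypothetical training situations: (speed, distance to obstacle, brightness), each scaled to 0..1
TRAINING = [
    ((0.6, 0.9, 0.5), "continue"),
    ((0.6, 0.2, 0.5), "brake"),
    ((0.3, 0.1, 0.4), "brake"),
    ((0.9, 0.8, 0.6), "continue"),
]


def choose_action(situation, training=TRAINING, max_distance=0.3):
    """Copy the action of the closest training situation, unless nothing in training is close."""
    distance, action = min((math.dist(situation, seen), act) for seen, act in training)
    if distance > max_distance:
        return "hand over to driver"  # situation is unlike anything in the training data
    return action


print(choose_action((0.6, 0.25, 0.5)))  # brake: very close to a known braking situation
print(choose_action((0.7, 0.1, 1.0)))   # hand over to driver: glare never appeared in training
```

Setting `max_distance` very high reproduces the risky behaviour: the model then answers every situation with full confidence, including ones it has never seen.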

This is a big drawback of these systems.

Investing & ML

All modern AI-based products work roughly like this. They rely on training data to predict outputs.

Even LLM products like ChatGPT and Gemini work on massive quantities of training data.

In investing too, there is a strategy called quant investing/trading.

These work in a similar fashion.

The traders feed vast quantities of historical data to a machine learning algorithm.

Then they backtest it, checking whether the trained model would have produced favourable results on past data.
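A backtest replays history and asks what a strategy would have earned. The sketch below uses a hypothetical rule, `momentum_signal` (hold the asset if the last price move was up), in place of a trained model, and made-up prices; real funds’ models and data are not public. The one design rule it does illustrate matters a lot: each day’s decision may only use prices up to the previous day, otherwise the backtest quietly peeks into the future.

```python
def backtest(prices, signal):
    """Replay history: each day hold the asset (1) or stay in cash (0), deciding from past prices only."""
    equity = 1.0
    for t in range(1, len(prices)):
        position = signal(prices[:t])  # the decision for day t sees prices up to day t-1
        daily_return = prices[t] / prices[t - 1] - 1
        equity *= 1 + position * daily_return
    return equity


def momentum_signal(history):
    """Hypothetical rule: hold if the most recent price move was up."""
    if len(history) < 2:
        return 0
    return 1 if history[-1] > history[-2] else 0


prices = [100, 101, 103, 102, 104]  # made-up daily closing prices
print(round(backtest(prices, momentum_signal), 4))   # 1.0099
print(round(backtest(prices, lambda history: 1), 4))  # 1.04 (buy and hold)
```

In this toy history, the rule would have done worse than simply holding, which is exactly the kind of result a backtest exists to reveal before real money is at stake.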

What kind of historical data do they use?

Like in the case of self-driving cars, different investors have different ideas.

Training data can include obvious data like price history, trading volume, news, quarterly reports, market sentiment, consumption data, etc.

But many other kinds of data are also included by different fund managers.

Many of these data points are closely guarded secrets.

Traditional investors analyse information themselves and decide what to do. Quant investors rely heavily on their machine learning models to tell them what to do.

A huge part of their time goes into training these machine learning models.

And similar to self-driving cars, they can fail in a big way when faced with situations that have not happened in the past.

A closely related problem is overfitting: a model fits its training data so closely, noise included, that it becomes excellent on that data but performs worse on situations it has not seen.
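Overfitting is easy to demonstrate with made-up numbers. Below, six noisy points roughly follow y = 2x. One model (a hypothetical `lagrange_fit` helper) passes exactly through every training point; the other is an ordinary least-squares straight line. The first looks perfect on the data it has seen and goes badly wrong at x = 7, just outside the training range, while the simpler line stays close to the underlying trend.

```python
def lagrange_fit(xs, ys):
    """Return the polynomial of degree len(xs) - 1 that passes exactly through every training point."""
    def poly(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return poly


def linear_fit(xs, ys):
    """Ordinary least-squares straight line y = a + b * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum((x - mean_x) ** 2 for x in xs)
    a = mean_y - b * mean_x
    return lambda x: a + b * x


# Made-up training data: roughly y = 2x plus noise
xs = [0, 1, 2, 3, 4, 5]
ys = [0.3, 1.8, 4.4, 5.7, 8.2, 9.9]
overfit = lagrange_fit(xs, ys)
simple = linear_fit(xs, ys)

print(overfit(3))            # 5.7: an exact match on a training point
print(round(overfit(7), 2))  # -76.0: wildly wrong on an unseen point
print(round(simple(7), 2))   # 13.86: close to the underlying 2 * 7 = 14
```

Note the trade-off: the straight line does not match every training point exactly, and that is precisely why it holds up better on a point it has never seen.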

Just like with self-driving cars, most quant investors do not rely on the models 100%. They use a mix of machine judgement and human supervision.

And in both fields, training data is vital and ever-increasing.

How does this affect you as an individual investor?

The point to note is that individual investors invest in the same share markets where quant-based investors operate.

It makes sense to know what others are doing and the potential impact it can have on the overall markets.

Quick Takes

+ The government has approved the first 7 companies under the ECMS (Electronics Component Manufacturing Scheme), bringing a combined investment of Rs 5,532 crore in the semiconductor and electronics manufacturing industry.

+ The IPO plans of Sterlite Electric, a Vedanta group company, have been put on hold by SEBI.

+ The central government approved the 8th Central Pay Commission to review pay and benefits for central government employees and pensioners within 18 months.

+ India’s industrial production rose 4% year-on-year in Sept (vs 4% in Aug). Manufacturing grew 4.8% (vs 3.8% in Aug).

+ The central government approved the nutrient-based subsidy rates for fertilizers for the Rabi 2025-26 season with an approximate cost of Rs 37,952.29 crore.

+ SEBI proposed changes to the mutual fund fee rules. The proposals include simpler fees, excluding taxes from costs, and lower brokerage and transaction fees. SEBI has invited feedback from stakeholders till 17 Nov.

+ The US Fed cut its benchmark interest rate by 25 basis points to a range of 3.75% to 4%.

+ The US granted India a 6-month sanctions exemption to continue developing Iran’s Chabahar Port, despite broader US sanctions on Iran, according to the Ministry of External Affairs.

+ SEBI issued new norms for non-benchmark indices used for derivatives trading. The indices must have at least 14 stocks, with the top stock’s weight capped at 20% and the top 3 at 45%. The Nifty Bank Index will expand from the existing 12 to 14 stocks in 4 phases by March 2026. Nifty Financial Services and BSE Bankex have to comply by 31 Dec.

+ India and the US signed a 10-year defence framework agreement to expand military and technological cooperation.

+ India’s forex reserves fell by $6.92 billion to $695.36 billion in the week that ended on 24 Oct.

The information contained in this Groww Digest is purely for knowledge. This Groww Digest does not contain any recommendations or advice.

Team Groww Digest
